A team of Korean engineering researchers has developed a quadrupedal robot technology that can climb up and down the steps and moves without falling over in uneven environments such as tree roots without the help of visual or tactile sensors even in disastrous situations in which visual confirmation is impeded due to darkness or thick smoke from the flames.

Professor Hyun Myung’s research team at the Urban Robotics Lab in the School of Electrical Engineering is behind the walking robot control technology that enables robust “blind locomotion” in various atypical environments.

The KAIST research team developed DreamWaQ technology, which was named so as it enables walking robots to move about even in the dark, just as a person can walk without visual help fresh out of bed and going to the bathroom in the dark. With this technology installed atop any legged robots, it will be possible to create various types of DreamWaQers.

Existing walking robot controllers are based on kinematics and/or dynamics models. This is expressed as a model-based control method. In particular, on atypical environments like the open, uneven fields, it is necessary to obtain the feature information of the terrain more quickly in order to maintain stability as it walks. However, it has been shown to depend heavily on the cognitive ability to survey the surrounding environment.

In contrast, the controller developed by Professor Hyun Myung’s research team based on deep reinforcement learning (RL) methods can quickly calculate appropriate control commands for each motor of the walking robot through data of various environments obtained from the simulator. Whereas the existing controllers that learned from simulations required a separate re-orchestration to make it work with an actual robot, this controller developed by the research team is expected to be easily applied to various walking robots because it does not require an additional tuning process.

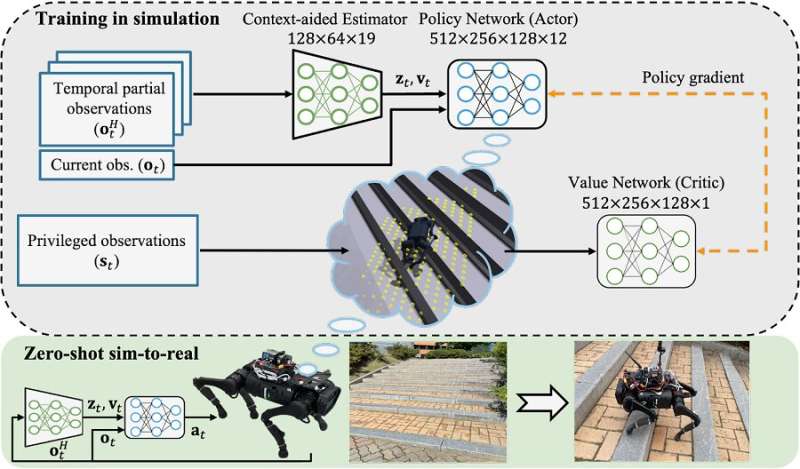

DreamWaQ, the controller developed by the research team, is largely composed of a context estimation network that estimates the ground and robot information and a policy network that computes control commands. The context-aided estimator network estimates the ground information implicitly and the robot’s status explicitly through inertial information and joint information. This information is fed into the policy network to be used to generate optimal control commands. Both networks are learned together in the simulation.

While the context-aided estimator network is learned through supervised learning, the policy network is learned through an actor-critic architecture, a deep RL methodology. The actor network can only implicitly infer surrounding terrain information. In the simulation, the surrounding terrain information is known, and the critic, or the value network, that has the exact terrain information evaluates the policy of the actor network.

This whole learning process takes only about an hour in a GPU-enabled PC, and the actual robot is equipped with only the network of learned actors. Without looking at the surrounding terrain, it goes through the process of imagining which environment is similar to one of the various environments learned in the simulation using only the inertial sensor (IMU) inside the robot and the measurement of joint angles. If it suddenly encounters an offset, such as a staircase, it will not know until its foot touches the step, but it will quickly draw up terrain information the moment its foot touches the surface. Then the control command suitable for the estimated terrain information is transmitted to each motor, enabling rapidly adapted walking.

The DreamWaQer robot walked not only in the laboratory environment, but also in on outdoor environment around the campus with many curbs and speed bumps, and over a field with many tree roots and gravel, demonstrating its abilities by overcoming a staircase with a difference of a height that is two-thirds of its body. In addition, regardless of the environment, the research team confirmed that it was capable of stable walking ranging from a slow speed of 0.3 m/s to a rather fast speed of 1.0 m/s.

The results of this study are available in a paper published on the arXiv preprint server and have been accepted to be presented at the upcoming IEEE International Conference on Robotics and Automation (ICRA) scheduled to be held in London at the end of May.

More information: I Made Aswin Nahrendra et al, DreamWaQ: Learning Robust Quadrupedal Locomotion With Implicit Terrain Imagination via Deep Reinforcement Learning, arXiv (2023). DOI: 10.48550/arxiv.2301.10602

Journal information: arXiv

Provided by KAIST (Korea Advanced Institute of Science and Technology)

Citation: DreamWaQer: A quadrupedal robot that can walk in the dark (2023, May 18) retrieved 18 May 2023 from https://techxplore.com/news/2023-05-dreamwaqer-quadrupedal-robot-dark.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without the written permission. The content is provided for information purposes only.